Texture mapping


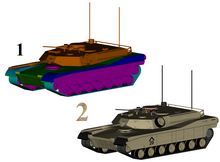

1: 3D model without textures

2: 3D model with textures

Texture mapping[1][2][3] is a method for defining high-frequency detail, surface texture, or color information on a computer-generated graphic or 3D model. Its application to 3D graphics was pioneered by Edwin Catmull in 1974.[4]

Texture mapping originally referred to a method (now more accurately called diffuse mapping) that simply wrapped and mapped pixels from a texture to a 3D surface. In recent decades, the advent of multi-pass rendering and of complex mapping techniques such as height mapping, bump mapping, normal mapping, displacement mapping, reflection mapping, specular mapping, mipmaps, occlusion mapping, and many other variations (controlled by a materials system) has made it possible to simulate near-photorealism in real time by vastly reducing the number of polygons and lighting calculations needed to construct a realistic and functional 3D scene.

Examples of multitexturing:

1: Untextured sphere, 2: Texture and bump maps, 3: Texture map only, 4: Opacity and texture maps.

Contents

1 Texture maps

1.1 Authoring

1.2 Texture application

1.2.1 Texture space

1.3 Multitexturing

1.4 Texture Filtering

1.5 Baking

2 Rasterisation algorithms

2.1 Forward texture mapping

2.2 Inverse texture mapping

2.3 Affine texture mapping

2.4 Perspective correctness

2.5 Restricted camera rotation

2.6 Subdivision for perspective correction

2.6.1 World space subdivision

2.6.2 Screen space subdivision

2.6.3 Other techniques

2.7 Hardware implementations

3 Applications

3.1 Tomography

3.2 User interfaces

4 See also

5 References

6 External links

Texture maps

A texture map[5][6] is an image applied (mapped) to the surface of a shape or polygon.[7] It may be a bitmap image or a procedural texture. Texture maps may be stored in common image file formats, referenced by 3D model formats or material definitions, and assembled into resource bundles.

Texture maps may have one to three dimensions, although two dimensions are most common for visible surfaces. For use with modern hardware, texture map data may be stored in swizzled or tiled orderings to improve cache coherency. Rendering APIs typically manage texture map resources (which may be located in device memory) as buffers or surfaces, and may allow 'render to texture' for additional effects such as post-processing or environment mapping.
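One common swizzle is Z-order (Morton) ordering, which interleaves the bits of a texel's x and y coordinates so that texels that are close in 2D tend to be close in memory. The sketch below assumes coordinates of at most 16 bits; the function names are hypothetical, and real GPU layouts are vendor-specific (often tiles combined with other swizzles), so this only illustrates the idea.

```python
def part1by1(n: int) -> int:
    """Spread the low 16 bits of n so that a zero bit sits between each original bit."""
    n &= 0x0000FFFF
    n = (n | (n << 8)) & 0x00FF00FF
    n = (n | (n << 4)) & 0x0F0F0F0F
    n = (n | (n << 2)) & 0x33333333
    n = (n | (n << 1)) & 0x55555555
    return n


def morton_index(x: int, y: int) -> int:
    """Z-order index: bits of x in even positions, bits of y in odd positions."""
    return part1by1(x) | (part1by1(y) << 1)


def linear_index(x: int, y: int, width: int) -> int:
    """Conventional row-major index, for comparison."""
    return y * width + x


if __name__ == "__main__":
    # Memory order of a 4x4 texture: each 2x2 block occupies 4 consecutive slots.
    for y in range(4):
        print([morton_index(x, y) for x in range(4)])
```

In row-major order, the texel directly below (x, y) is a whole row away in memory; in Z-order it is usually only a few slots away, which is what improves cache hit rates for the 2D-local access patterns of texture sampling.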

They usually contain RGB color data (either stored as direct color, compressed formats, or indexed color), and sometimes an additional channel for alpha blending (RGBA) especially for billboards and decal overlay textures. It is possible to use the alpha channel (which may be convenient to store in formats parsed by hardware) for other uses such as specularity.

Multiple texture maps (or channels) may be combined for control over specularity, normals, displacement, or subsurface scattering (e.g. for skin rendering).

Multiple texture images may be combined in texture atlases or array textures to reduce state changes on modern hardware; these may be considered a modern evolution of tile map graphics. Modern hardware often supports cube map textures with multiple faces for environment mapping.
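When several images share an atlas, each sub-image occupies a rectangle, and a mesh's original [0, 1] UVs must be remapped into that rectangle. A minimal sketch, assuming normalized atlas coordinates and a hypothetical `AtlasRegion` record. It also shows one complication of atlases: a repeating sub-texture has to be wrapped before remapping, because a sampler's wrap mode would wrap across the whole atlas.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class AtlasRegion:
    """Hypothetical record: a sub-image's rectangle in normalized atlas coordinates."""
    u0: float      # left edge
    v0: float      # top edge
    width: float
    height: float


def atlas_uv(region: AtlasRegion, u: float, v: float, repeat: bool = False) -> tuple[float, float]:
    """Map a sub-image UV in [0, 1] to the corresponding atlas UV."""
    if repeat:
        # Wrap inside the sub-image; hardware wrapping would use the whole atlas instead.
        u -= math.floor(u)
        v -= math.floor(v)
    return (region.u0 + u * region.width, region.v0 + v * region.height)
```

In practice atlases also need padding between regions so that filtering and mipmapping do not bleed texels from neighbouring sub-images; that is omitted here.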

Authoring

Texture maps may be acquired by scanning or digital photography, authored in image manipulation software such as GIMP or Photoshop, or painted directly onto 3D surfaces in a 3D paint tool such as Mudbox or ZBrush.

Texture application

This process is akin to applying patterned paper to a plain white box. Every vertex in a polygon is assigned a texture coordinate (which in the 2D case is also known as a UV coordinate).

This may be done by explicitly assigning vertex attributes, typically edited manually in a 3D modelling package with UV unwrapping tools. It is also possible to associate a procedural transformation from 3D space to texture space with the material, such as a planar, cylindrical, or spherical projection. More complex mappings may take distance along the surface into account to minimize distortion.
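The three procedural projections can each be written as a function from a 3D point to (u, v). The sketch below assumes a y-up coordinate system, an object centred at the origin, and a planar projection along the z axis; the axis, seam position, and orientation conventions differ between tools, so these are illustrative choices rather than a standard.

```python
import math


def planar_uv(x: float, y: float, z: float, size: float = 1.0) -> tuple[float, float]:
    """Project along z: drop z and map x, y from [-size/2, size/2] into [0, 1]."""
    return (x / size + 0.5, y / size + 0.5)


def cylindrical_uv(x: float, y: float, z: float, height: float = 1.0) -> tuple[float, float]:
    """Wrap around the y axis: u from the angle about y, v from the height."""
    u = 0.5 + math.atan2(z, x) / (2.0 * math.pi)
    v = y / height + 0.5
    return (u, v)


def spherical_uv(x: float, y: float, z: float) -> tuple[float, float]:
    """Longitude/latitude of the direction from the origin (undefined at the origin)."""
    r = math.sqrt(x * x + y * y + z * z)
    u = 0.5 + math.atan2(z, x) / (2.0 * math.pi)
    v = 0.5 + math.asin(y / r) / math.pi
    return (u, v)
```

With these conventions u jumps from 1 back to 0 across the negative x axis (the seam); modelling tools typically duplicate vertices along a seam so that interpolation does not sweep across the whole texture there.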

These coordinates are interpolated across the faces of polygons to sample the texture map during rendering.
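Across a triangle, this interpolation is commonly expressed with barycentric weights. A minimal sketch of plain linear (affine) barycentric interpolation of UVs at a point inside a 2D triangle; the helper names are hypothetical, and the perspective issue discussed under Perspective correctness is deliberately ignored here.

```python
Vec2 = tuple[float, float]


def edge(a: Vec2, b: Vec2, p: Vec2) -> float:
    """Twice the signed area of triangle (a, b, p)."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def barycentric(p: Vec2, a: Vec2, b: Vec2, c: Vec2) -> tuple[float, float, float]:
    """Weights (wa, wb, wc) summing to 1 with p == wa*a + wb*b + wc*c."""
    area = edge(a, b, c)
    return (edge(b, c, p) / area, edge(c, a, p) / area, edge(a, b, p) / area)


def interpolate_uv(p: Vec2, verts, uvs) -> Vec2:
    """Blend the three vertex UVs with the barycentric weights of p."""
    wa, wb, wc = barycentric(p, *verts)
    u = wa * uvs[0][0] + wb * uvs[1][0] + wc * uvs[2][0]
    v = wa * uvs[0][1] + wb * uvs[1][1] + wc * uvs[2][1]
    return (u, v)
```

For example, with vertices (0, 0), (4, 0), (0, 4) carrying UVs (0, 0), (1, 0), (0, 1), the point (1, 1) receives the UV (0.25, 0.25).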

Textures may be repeated or mirrored to extend a finite rectangular bitmap over a larger area, or they may have a one-to-one unique "injective" mapping from every piece of a surface (which is important for render mapping and light mapping, also known as baking).
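Repeating, mirroring, and clamping are usually applied before the texel lookup. A sketch of the three behaviours on an integer texel index for a texture `n` texels wide; the function names are hypothetical, while graphics APIs expose equivalents as sampler wrap or address modes (for example OpenGL's `GL_REPEAT`, `GL_MIRRORED_REPEAT`, and `GL_CLAMP_TO_EDGE`).

```python
def wrap_repeat(i: int, n: int) -> int:
    """Tile the texture: index n maps back to 0."""
    return i % n  # Python's % is non-negative for positive n


def wrap_mirror(i: int, n: int) -> int:
    """Mirrored repeat: 0..n-1, then n-1..0, then 0..n-1, and so on."""
    period = 2 * n
    j = i % period
    return j if j < n else period - 1 - j


def wrap_clamp(i: int, n: int) -> int:
    """Clamp to edge: indices outside the texture reuse the border texel."""
    return max(0, min(n - 1, i))
```

Repeat suits tiling materials such as bricks, mirroring hides the seam of textures whose opposite edges do not match, and clamping suits unique (injective) mappings such as light maps, where wrapping would pull in texels from the opposite side.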

Texture space

Texture mapping maps the model surface (or screen space during rasterization) into texture space; in this space, the texture map is visible in its undistorted form. UV unwrapping tools typically provide a view in texture space for manual editing of texture coordinates. Some rendering techniques such as subsurface scattering may be performed approximately by texture-space operations.

Multitexturing

Multitexturing is the use of more than one texture at a time on a polygon.[8] For instance, a light map texture may be used to light a surface as an alternative to recalculating that lighting every time the surface is rendered. Microtextures or detail textures are used to add higher frequency details, and dirt maps may add weathering and variation; this can greatly reduce the apparent periodicity of repeating textures. Modern graphics may use more than 10 layers, which are combined using shaders, for greater fidelity. Another multitexture technique is bump mapping, which allows a texture to directly control the facing direction of a surface for the purposes of its lighting calculations; it can give a very good appearance of a complex surface (such as tree bark or rough concrete) that takes on lighting detail in addition to the usual detailed coloring. Bump mapping has become popular in recent video games, as graphics hardware has become powerful enough to accommodate it in real-time.[9]
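A simple way to combine a base colour texture, a light map, and a detail texture is per-channel multiplication of the sampled values. The sketch below assumes RGB colours as floats in [0, 1] and treats a detail value of 0.5 as neutral (a "modulate 2x" convention used by some engines); the layer set and this convention are illustrative assumptions, and in a real renderer the combination would run in a shader on already-sampled texels.

```python
Color = tuple[float, float, float]


def modulate(a: Color, b: Color) -> Color:
    """Per-channel product of two colours."""
    return (a[0] * b[0], a[1] * b[1], a[2] * b[2])


def combine_layers(base: Color, lightmap: Color, detail: float) -> Color:
    """base * lightmap * (2 * detail), clamped to [0, 1]."""
    lit = modulate(base, lightmap)          # precomputed lighting instead of per-frame lighting
    scale = 2.0 * detail                    # detail == 0.5 leaves the colour unchanged
    return tuple(min(1.0, c * scale) for c in lit)
```

Because the detail texture is usually tiled at a much higher frequency than the base texture, its variation breaks up the visible periodicity of the base layer.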

Texture Filtering

The way that samples (e.g. when viewed as pixels on the screen) are calculated from the texels (texture pixels) is governed by texture filtering. The cheapest method is nearest-neighbour interpolation, but bilinear interpolation and trilinear interpolation between mipmaps are two commonly used alternatives that reduce aliasing or jaggies. If a texture coordinate falls outside the texture, it is either clamped or wrapped. Anisotropic filtering further reduces directional artefacts when textures are viewed from oblique angles.
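The difference between nearest-neighbour and bilinear filtering can be shown on a single-channel texture stored as a list of rows. A minimal sketch, assuming texel centres at (i + 0.5) / width as in OpenGL and repeat wrapping for out-of-range texels; mipmapping and anisotropic filtering are omitted, and the names are hypothetical.

```python
import math

Texture = list[list[float]]  # rows of single-channel texels


def texel(tex: Texture, x: int, y: int) -> float:
    """Fetch one texel with repeat wrapping."""
    h, w = len(tex), len(tex[0])
    return tex[y % h][x % w]


def sample_nearest(tex: Texture, u: float, v: float) -> float:
    """Return the texel whose cell contains (u, v)."""
    h, w = len(tex), len(tex[0])
    return texel(tex, math.floor(u * w), math.floor(v * h))


def sample_bilinear(tex: Texture, u: float, v: float) -> float:
    """Blend the four texels around (u, v) by their distance to it."""
    h, w = len(tex), len(tex[0])
    # Shift by half a texel so that integer positions are texel centres.
    x, y = u * w - 0.5, v * h - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0
    top = (1 - fx) * texel(tex, x0, y0) + fx * texel(tex, x0 + 1, y0)
    bottom = (1 - fx) * texel(tex, x0, y0 + 1) + fx * texel(tex, x0 + 1, y0 + 1)
    return (1 - fy) * top + fy * bottom
```

On the 2×2 texture `[[0.0, 1.0], [0.0, 1.0]]`, nearest-neighbour sampling jumps from 0.0 to 1.0 at u = 0.5, whereas bilinear sampling returns 0.5 there and varies smoothly between the texel centres at u = 0.25 and u = 0.75.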

Baking

As an optimization, it is possible to render detail from a high-resolution model or an expensive process (such as global illumination) into a surface texture (possibly on a low-resolution model). This is also known as render mapping. The technique is most commonly used for light mapping but may also be used to generate normal maps and displacement maps. Some video games (e.g. Messiah) have used this technique. The original Quake software engine used on-the-fly baking to combine light maps and colour texture maps ("surface caching").

Baking can be used as a form of level-of-detail generation, where a complex scene with many different elements and materials may be approximated by a single element with a single texture, which is then algorithmically reduced for lower rendering cost and fewer draw calls. It is also used to take high-detail models from 3D sculpting software and point cloud scanning and approximate them with meshes more suitable for real-time rendering.

Rasterisation algorithms

Various techniques have evolved in software and hardware implementations. Each offers different trade-offs in precision, versatility and performance.

Forward texture mapping

Some hardware systems, such as the Sega Saturn and the NV1, traverse texture coordinates directly, interpolating the projected position in screen space through texture space and splatting the texels into a frame buffer (in the case of the NV1, quadratic interpolation was used, allowing curved rendering). Sega provided tools for baking suitable per-quad texture tiles from UV-mapped models.

This has the advantage that texture maps are read in a simple linear fashion.
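Forward texture mapping can be sketched as a loop over the texels of a quad in storage order: each texel's screen position is found by bilinearly interpolating the quad's four projected corners, and the texel is splatted into the frame buffer. This is a simplified illustration under stated assumptions (a dictionary frame buffer, one splat per texel, hypothetical names); the NV1's quadratic interpolation and any hardware hole-filling rules are not modelled.

```python
import math

Point = tuple[float, float]


def lerp(a: Point, b: Point, t: float) -> Point:
    return (a[0] + (b[0] - a[0]) * t, a[1] + (b[1] - a[1]) * t)


def forward_map(texture, corners, framebuffer):
    """Splat every texel of `texture` into `framebuffer`, a dict keyed by (x, y) pixel.

    corners: projected screen positions of the quad, ordered top-left, top-right,
    bottom-right, bottom-left (texture corners (0,0), (1,0), (1,1), (0,1)).
    """
    tl, tr, br, bl = corners
    h, w = len(texture), len(texture[0])
    for ty in range(h):                      # texture rows are read sequentially...
        t = (ty + 0.5) / h
        left, right = lerp(tl, bl, t), lerp(tr, br, t)
        for tx in range(w):                  # ...and so are the texels in each row
            x, y = lerp(left, right, (tx + 0.5) / w)
            framebuffer[(math.floor(x), math.floor(y))] = texture[ty][tx]
    return framebuffer
```

Because there is exactly one splat per texel, a quad that covers more pixels than it has texels leaves gaps, and one that covers fewer writes some pixels several times; the trade-off for the simple, linear texture reads is less control over the output side.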

Forward texture mapping may also sometimes produce more natural-looking results than affine texture mapping if the primitives are aligned with prominent texture directions (e.g. road markings or layers of bricks), which provides a limited form of perspective correction. However, perspective distortion is still visible for primitives near the camera (e.g. the Saturn port of Sega Rally exhibited texture-squashing artifacts as nearby polygons were clipped at the near plane without UV coordinates).

This technique is not used in modern hardware because UV coordinates have proved more versatile for modelling and more consistent for clipping.

Inverse texture mapping

Most approaches use inverse texture mapping, which traverses the rendering primitives in screen space whilst interpolating texture coordinates for sampling. This interpolation may be affine or perspective-correct. One advantage is that each output pixel is guaranteed to be traversed only once, which matters because the frame buffer generally uses a higher bit-depth than the source texture map data, which is often stored in a lower bit-depth or compressed form. Another advantage is greater versatility for UV mapping. A texture cache becomes important for buffering reads, since the memory access pattern in texture space is more complex.
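Inverse texture mapping reverses the loop: it visits each covered pixel once and computes the texture coordinate to sample there. The sketch below rasterises a triangle by testing pixel centres in its bounding box with edge functions, interpolates UVs affinely, and fetches the nearest texel. The dictionary frame buffer, the nearest-texel fetch, and the rule of skipping triangles with non-positive signed area are simplifying assumptions, and the names are hypothetical.

```python
import math


def edge(a, b, px, py):
    """Twice the signed area of triangle (a, b, p)."""
    return (b[0] - a[0]) * (py - a[1]) - (b[1] - a[1]) * (px - a[0])


def inverse_map(texture, verts, uvs, framebuffer):
    """Fill a screen-space triangle, writing each covered pixel exactly once."""
    a, b, c = verts
    area = edge(a, b, c[0], c[1])
    if area <= 0:
        return framebuffer  # degenerate or opposite winding: skipped in this sketch
    h, w = len(texture), len(texture[0])
    xmin, xmax = math.floor(min(a[0], b[0], c[0])), math.ceil(max(a[0], b[0], c[0]))
    ymin, ymax = math.floor(min(a[1], b[1], c[1])), math.ceil(max(a[1], b[1], c[1]))
    for py in range(ymin, ymax):
        for px in range(xmin, xmax):
            cx, cy = px + 0.5, py + 0.5  # sample at the pixel centre
            wa = edge(b, c, cx, cy) / area
            wb = edge(c, a, cx, cy) / area
            wc = edge(a, b, cx, cy) / area
            if wa < 0 or wb < 0 or wc < 0:
                continue  # pixel centre lies outside the triangle
            u = wa * uvs[0][0] + wb * uvs[1][0] + wc * uvs[2][0]
            v = wa * uvs[0][1] + wb * uvs[1][1] + wc * uvs[2][1]
            # Texture reads jump around texture space, hence the need for a texture cache.
            framebuffer[(px, py)] = texture[min(h - 1, int(v * h))][min(w - 1, int(u * w))]
    return framebuffer
```

The output side is now regular (one write per pixel), while the texture reads follow whatever path the UVs take through texture space.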

Affine texture mapping

Because affine texture mapping does not take into account the depth information about a polygon's vertices, it produces a noticeable defect wherever the polygon is not perpendicular to the viewer.

Affine texture mapping linearly interpolates texture coordinates across a surface, which makes it the cheapest form of texture mapping. Some software and hardware systems (such as the original PlayStation) project 3D vertices onto the screen during rendering and linearly interpolate the texture coordinates in screen space between them (inverse texture mapping). This may be done by incrementing fixed-point UV coordinates or by an incremental error algorithm akin to Bresenham's line algorithm.
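The fixed-point approach can be sketched for one horizontal span: the texture coordinates at the span's ends are converted to fixed point, a per-pixel increment is computed once, and the inner loop only adds and shifts. The 16.16 format and the function names are assumptions for illustration; renderers of the era differed in precision and register layout.

```python
FRAC_BITS = 16
ONE = 1 << FRAC_BITS  # 1.0 in 16.16 fixed point


def to_fixed(x: float) -> int:
    return int(round(x * ONE))


def affine_span(u0: float, v0: float, u1: float, v1: float, length: int) -> list[tuple[int, int]]:
    """Integer texel coordinates visited along a span of `length` pixels."""
    u, v = to_fixed(u0), to_fixed(v0)
    steps = max(1, length - 1)
    du = (to_fixed(u1) - u) // steps  # per-pixel increments, computed once per span
    dv = (to_fixed(v1) - v) // steps
    texels = []
    for _ in range(length):
        texels.append((u >> FRAC_BITS, v >> FRAC_BITS))  # integer part selects the texel
        u += du
        v += dv
    return texels
```

The increments are truncated to whole fixed-point units, so a long span can fall slightly short of its end coordinate; the inner loop, however, needs no multiplication or division.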

This leads to noticeable distortion with perspective transformations (see figure: the textures, i.e. the checker boxes, appear bent), especially as primitives approach the camera. Such distortion may be reduced with subdivision.

Perspective correctness

Perspective-correct texturing accounts for the vertices' positions in 3D space rather than simply interpolating coordinates in 2D screen space.[10] This achieves the correct visual effect, but it is more expensive to calculate.[10] Instead of interpolating the texture coordinates directly, the coordinates are divided by their depth (relative to the viewer), and the reciprocal of the depth value is also interpolated and used to recover the perspective-correct coordinate. As a result, in parts of the polygon that are closer to the viewer the pixel-to-pixel difference between texture coordinates is smaller (stretching the texture wider), and in parts that are farther away it is larger (compressing the texture).

- Affine texture mapping directly interpolates a texture coordinate $u_\alpha$ between two endpoints $u_0$ and $u_1$:

  $$u_\alpha = (1-\alpha)\,u_0 + \alpha\,u_1 \quad \text{where } 0 \le \alpha \le 1$$

- Perspective-correct mapping interpolates after dividing by depth $z$, then uses its interpolated reciprocal to recover the correct coordinate:

  $$u_\alpha = \frac{(1-\alpha)\dfrac{u_0}{z_0} + \alpha\dfrac{u_1}{z_1}}{(1-\alpha)\dfrac{1}{z_0} + \alpha\dfrac{1}{z_1}}$$
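The two formulas can be compared numerically. In the sketch below, `alpha` is the fraction of the way along a screen-space span whose endpoints have texture coordinates `u0`, `u1` and depths `z0`, `z1`; the function names and example values are illustrative. The quantities u/z and 1/z are affine in screen space, which is why a rasteriser can step them incrementally and needs only one division per pixel to recover u.

```python
def affine_u(u0: float, u1: float, alpha: float) -> float:
    """Linear interpolation in screen space (ignores depth)."""
    return (1 - alpha) * u0 + alpha * u1


def perspective_u(u0: float, z0: float, u1: float, z1: float, alpha: float) -> float:
    """Interpolate u/z and 1/z in screen space, then divide to recover u."""
    u_over_z = (1 - alpha) * u0 / z0 + alpha * u1 / z1
    one_over_z = (1 - alpha) / z0 + alpha / z1
    return u_over_z / one_over_z
```

For example, with u0 = 0 at depth z0 = 1 and u1 = 1 at depth z1 = 3, the screen-space midpoint (alpha = 0.5) samples u = 0.5 under affine mapping but u = 0.25 with perspective correction: the nearer half of the span covers only a quarter of the texture, which is the stretching described above. When z0 equals z1, the two formulas agree.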

All modern 3D graphics hardware implements perspective-correct texturing.

Doom renders vertical and horizontal spans with affine texture mapping, and is therefore unable to draw ramped floors or slanted walls.

Various techniques have evolved for rendering texture mapped geometry into images with different quality/precision tradeoffs, which can be applied to both software and hardware.

Classic software texture mappers generally did only simple mapping with at most one lighting effect (typically applied through a lookup table), and perspective correction was about 16 times more expensive.

Restricted camera rotation

The Doom engine restricted the world to vertical walls and horizontal floors and ceilings, with a camera that could only rotate about the vertical axis. This meant that walls would have a constant depth along any vertical screen line, and floors and ceilings a constant depth along any horizontal screen line. Fast affine mapping could therefore be used along those lines, because there it is exact. Some later renderers of this era simulated a small amount of camera pitch with shearing, which allowed the appearance of greater freedom whilst using the same rendering technique.
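The floor case can be sketched as follows. For a pinhole camera at height h above the floor with focal length f (in pixels) and horizon row y_h, a screen row y below the horizon sees the floor at the single depth z = h·f / (y − y_h), measured here at the pixel centre, so floor coordinates can be stepped linearly across the whole row. The camera model, parameter names, and the choice of world floor coordinates as texture coordinates are assumptions for illustration, not Doom's actual code.

```python
import math


def floor_row(cam_x, cam_y, yaw, height, focal, horizon, row, width):
    """World floor coordinates for each pixel of one screen row (row > horizon).

    Depth is constant along the row, so the coordinates are stepped linearly.
    """
    z = height * focal / (row + 0.5 - horizon)   # one division per row
    fx, fy = math.cos(yaw), math.sin(yaw)         # forward direction on the floor
    rx, ry = -fy, fx                              # sideways direction on the floor
    cx = width / 2
    lateral0 = (0.5 - cx) * z / focal             # sideways offset of the first pixel centre
    wx = cam_x + fx * z + rx * lateral0
    wy = cam_y + fy * z + ry * lateral0
    step = z / focal                              # world distance covered by one pixel
    dx, dy = rx * step, ry * step
    coords = []
    for _ in range(width):
        coords.append((wx, wy))  # a renderer would sample the floor texture here
        wx += dx
        wy += dy
    return coords
```

The inner loop is the same add-only affine stepping as for any span; it is exact here only because the camera cannot pitch or roll, so every pixel in the row lies at the same depth.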

Some engines were able to render texture-mapped heightmaps (e.g. NovaLogic's Voxel Space and the engine for Outcast) via Bresenham-like incremental algorithms, producing the appearance of a texture-mapped landscape without the use of traditional geometric primitives.[11]

Subdivision for perspective correction

Every triangle can be further subdivided into groups of about 16 pixels in order to achieve two goals: first, keeping the arithmetic mill busy at all times; second, producing faster arithmetic results.

World space subdivision

For perspective texture mapping without hardware support, a triangle is broken down into smaller triangles for rendering, and affine mapping is used on them. This works because the distortion of affine mapping becomes much less noticeable on smaller polygons. The Sony PlayStation made extensive use of this technique because it only supported affine mapping in hardware but had a relatively high triangle throughput compared to its peers.
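Why subdivision helps can be seen in one dimension. The sketch below splits a single edge in camera space into `n` equal pieces, projects the split points with a focal length of 1, and interpolates u affinely in screen space within each piece; it returns the largest error against exact perspective mapping over a sweep of screen positions. Reducing a triangle to one edge, the default endpoint values, and the sample count are simplifications chosen for illustration.

```python
def project(x: float, z: float) -> float:
    """Pinhole projection with a focal length of 1."""
    return x / z


def exact_u(s, x0, z0, u0, x1, z1, u1):
    """u at the point of the 3D edge that projects to screen position s."""
    # Solve (x0 + t*(x1 - x0)) / (z0 + t*(z1 - z0)) == s for t; u is linear in t.
    t = (s * z0 - x0) / ((x1 - x0) - s * (z1 - z0))
    return u0 + t * (u1 - u0)


def subdivided_affine_error(n, x0=-1.0, z0=1.0, u0=0.0, x1=1.0, z1=4.0, u1=1.0, samples=200):
    """Max |affine - exact| after splitting the edge into n pieces in camera space."""
    # Split where u varies linearly (camera space), then project each split point.
    pts = []
    for k in range(n + 1):
        t = k / n
        x, z, u = x0 + t * (x1 - x0), z0 + t * (z1 - z0), u0 + t * (u1 - u0)
        pts.append((project(x, z), u))
    worst = 0.0
    for i in range(samples + 1):
        s = pts[0][0] + (pts[-1][0] - pts[0][0]) * i / samples
        approx = pts[-1][1]
        for (sa, ua), (sb, ub) in zip(pts, pts[1:]):
            if min(sa, sb) <= s <= max(sa, sb):
                # Affine interpolation inside this piece, in screen space.
                approx = ua + (s - sa) / (sb - sa) * (ub - ua)
                break
        worst = max(worst, abs(approx - exact_u(s, x0, z0, u0, x1, z1, u1)))
    return worst
```

With the defaults (depth ratio 4 along the edge), the worst error is about one third of the texture width with no subdivision and falls roughly with the square of the number of pieces, which is why many small affine-mapped triangles can look acceptable.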

Screen space subdivision

Software renderers generally preferred screen subdivision because it has less overhead. Additionally, they try to do linear interpolation along a line of pixels to simplify the set-up (compared with 2D affine interpolation) and thus further reduce the overhead. Affine texture mapping also does not fit into the small number of registers of the x86 CPU; the 68000 or any RISC processor is much better suited.

A different approach was taken for Quake, which calculated perspective-correct coordinates only once every 16 pixels of a scanline and linearly interpolated between them, effectively running at the speed of linear interpolation because the perspective-correct calculation runs in parallel on the co-processor.[12] Because the polygons are rendered independently, it may be possible to switch between spans, columns, or diagonal directions depending on the orientation of the polygon normal to achieve a more constant z, but the effort does not seem to be worth it.
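The span-subdivision idea can be sketched as follows: compute exact perspective-correct u at every 16th pixel (and at the end of the span), and interpolate linearly in between. The function names and the `step` parameter are illustrative; in Quake the per-segment division ran in parallel on the floating-point co-processor, which this sequential sketch does not model.

```python
def perspective_u_at(alpha, u0, z0, u1, z1):
    """Exact perspective-correct u at screen fraction alpha along the span."""
    a = (1 - alpha) * u0 / z0 + alpha * u1 / z1
    b = (1 - alpha) / z0 + alpha / z1
    return a / b


def span_subdivided(u0, z0, u1, z1, length, step=16):
    """Per-pixel u for a span: exact every `step` pixels, linear in between."""
    if length <= 0:
        return []
    last = length - 1
    out = []
    x = 0
    u_start = perspective_u_at(0.0, u0, z0, u1, z1)
    while x < last:
        x_end = min(x + step, last)
        u_end = perspective_u_at(x_end / last, u0, z0, u1, z1)  # one division per segment
        du = (u_end - u_start) / (x_end - x)
        for i in range(x_end - x):
            out.append(u_start + du * i)  # cheap affine stepping inside the segment
        x, u_start = x_end, u_end
    out.append(u_start)  # final pixel of the span
    return out
```

With `step=1` every pixel is exact; with the default of 16 the error stays well below that of affine mapping over the whole span, while the number of divisions drops by a factor of about 16.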

Screen space subdivision techniques. Top left: Quake-like; top right: bilinear; bottom left: const-z.

Other techniques

Another technique was approximating the perspective with a faster calculation, such as a polynomial. Still another technique uses the 1/z values of the last two drawn pixels to linearly extrapolate the next value. The division is then done starting from those values, so that only a small remainder has to be divided,[13] but the amount of bookkeeping makes this method too slow on most systems.
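As one example of a polynomial approximation, u can be fitted with a quadratic through its exact values at the start, middle, and end of a span, then evaluated with forward differences so that the inner loop needs only two additions per pixel. The choice of a quadratic and of these three sample points is an assumption for illustration; other polynomials and fitting rules are possible, and the names are hypothetical.

```python
def perspective_u_at(alpha, u0, z0, u1, z1):
    """Exact perspective-correct u at screen fraction alpha along the span."""
    a = (1 - alpha) * u0 / z0 + alpha * u1 / z1
    b = (1 - alpha) / z0 + alpha / z1
    return a / b


def quadratic_span(u0, z0, u1, z1, length):
    """Approximate perspective-correct u along a span with a quadratic."""
    if length < 3:
        return [perspective_u_at(i / max(1, length - 1), u0, z0, u1, z1) for i in range(length)]
    n = length - 1
    # Three exact evaluations: start, middle and end of the span.
    ua = perspective_u_at(0.0, u0, z0, u1, z1)
    um = perspective_u_at(0.5, u0, z0, u1, z1)
    ub = perspective_u_at(1.0, u0, z0, u1, z1)
    # q(t) = ua + b*t + c*t^2 with q(0.5) == um and q(1) == ub, where t = x / n.
    c = 2.0 * (ua + ub - 2.0 * um)
    b = ub - ua - c
    # Forward differences for t advancing by h = 1/n per pixel.
    h = 1.0 / n
    value = ua
    d1 = b * h + c * h * h   # q(h) - q(0)
    d2 = 2.0 * c * h * h     # constant second difference
    out = []
    for _ in range(length):
        out.append(value)
        value += d1          # two additions per pixel, no division
        d1 += d2
    return out
```

For a span whose depth triples from start to end, this quadratic stays within about 0.07 of the exact u (in units of the texture width), compared with about 0.27 for plain affine interpolation.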

Finally, the Build engine extended the constant distance trick used for Doom by finding the line of constant distance for arbitrary polygons and rendering along it.

Hardware implementations

Texture mapping hardware was originally developed for simulation (e.g. as implemented in the Evans and Sutherland ESIG image generators), professional graphics workstations such as those from Silicon Graphics, and broadcast digital video effects machines such as the Ampex ADO; it later appeared in arcade machines, consumer video game consoles, and PC graphics cards in the mid-1990s. In flight simulation, texture mapping provided important motion cues.

Modern graphics processing units provide specialised fixed-function units called texture samplers or texture mapping units to perform texture mapping, usually with trilinear filtering or better multi-tap anisotropic filtering, and hardware for decoding specific formats such as DXTn. As of 2016, texture mapping hardware is ubiquitous, as most SoCs contain a suitable GPU.

Some hardware combines texture mapping with hidden surface determination in tile-based deferred rendering or scanline rendering; such systems fetch only the visible texels, at the expense of using a larger workspace for transformed vertices. Most systems have settled on the Z-buffer approach, which can still reduce the texture mapping workload with front-to-back sorting.

Applications

Beyond 3D rendering, the availability of texture mapping hardware has inspired its use for accelerating other tasks:

Tomography

Texture mapping hardware can be used both to accelerate the reconstruction of voxel data sets from tomographic scans and to visualize the results.[14]

User interfaces

Many user interfaces use texture mapping to accelerate animated transitions of screen elements, e.g. Exposé in Mac OS X.

See also

- 2.5D

- 3D computer graphics

- Mipmap

- Materials system

- Parametrization

- Texture synthesis

- Texture atlas

- Texture splatting, a technique for combining textures

- Shader (computer graphics)

References

^ Wang, Huamin. "Texture Mapping" (PDF). Department of Computer Science and Engineering, Ohio State University. Retrieved 2016-01-15.

^ http://www.inf.pucrs.br/flash/tcg/aulas/texture/texmap.pdf

^ "CS 405 Texture Mapping". www.cs.uregina.ca. Retrieved 22 March 2018.

^ Catmull, E. (1974). A subdivision algorithm for computer display of curved surfaces (PDF) (PhD thesis). University of Utah.

^ http://www.microsoft.com/msj/0199/direct3d/direct3d.aspx

^ "The OpenGL Texture Mapping Guide". homepages.gac.edu. Retrieved 22 March 2018.

^ Jon Radoff, Anatomy of an MMORPG, "Archived copy". Archived from the original on 2009-12-13. Retrieved 2009-12-13.

^ Blythe, David. Advanced Graphics Programming Techniques Using OpenGL. Siggraph 1999. (see: Multitexture)

^ Kautz, Jan; Heidrich, Wolfgang; Seidel, Hans-Peter. "Real-Time Bump Map Synthesis". Max-Planck-Institut für Informatik and University of British Columbia.

^ "The Next Generation 1996 Lexicon A to Z: Perspective Correction". Next Generation. No. 15. Imagine Media. March 1996. p. 38.

^ "Voxel terrain engine", introduction. In a coder's mind, 2005 (archived 2013).

^ Abrash, Michael. Michael Abrash's Graphics Programming Black Book Special Edition. The Coriolis Group, Scottsdale, Arizona, 1997. ISBN 1-57610-174-6. PDF archived 2007-03-11 at the Wayback Machine. Chapter 70, p. 1282.

^ US 5739818, Spackman, John Neil, "Apparatus and method for performing perspectively correct interpolation in computer graphics", issued 1998-04-14

^ "texture mapping for tomography".

External links

- Introduction into texture mapping using C and SDL

- Programming a textured terrain using XNA/DirectX, from www.riemers.net

- Perspective correct texturing

- Time Texturing: texture mapping with Bézier lines

- Polynomial Texture Mapping: Interactive Relighting for Photos

- Métodos de interpolación a partir de puntos (in Spanish): methods that can be used to interpolate a texture knowing the texture coordinates at the vertices of a polygon